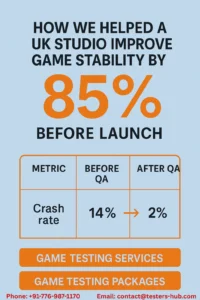

How We Helped a UK Studio Improve Game Stability by 85% Before Launch

Introduction: Why Game Stability Can Make or Break a Launch

In today’s gaming market, performance issues can ruin even the most beautifully designed title. Players expect smooth gameplay, quick load times, and zero crashes from day one. When a game fails to meet those expectations, negative reviews and uninstalls follow almost instantly.

That’s exactly the challenge one UK-based studio faced before releasing its action-adventure RPG for mobile and PC. The developers were proud of their mechanics and visuals, yet internal tests revealed unpredictable crashes and frame rate drops on several devices.

That’s where Testers HUB stepped in. Our QA experts collaborated with the client’s in-house team to stabilize gameplay across platforms and deliver a launch-ready experience.

👉 Need help stabilizing your own title?

About the Client

Our client was a mid-sized UK studio developing a cross-platform RPG aimed at casual and mid-core players. The game combined dynamic combat, story-driven missions, and real-time multiplayer features. The developers planned a global beta release within four weeks, but performance issues were already affecting internal builds.

- Target platforms: Android, iOS, and Windows PC

- Engine: Unity 3D

- Player base: 100K+ expected beta sign-ups

- Timeline: Four weeks before beta launch

The Challenge

Despite extensive internal testing, the QA team reported recurring problems:

- Frequent crashes on Android 12–14 and mid-range devices

- Severe FPS drops during boss fights and high-particle scenes

- Gradual memory leaks after long play sessions

- Multiplayer desynchronization when players used different network types

- Occasional stutters and overheating on specific GPUs

With the launch countdown underway, the studio needed a structured QA plan that could identify and resolve these stability issues—fast.

Our Game QA Approach

We began by establishing a dedicated QA squad consisting of senior functional testers, performance analysts, and device specialists. Collaboration was key, so our testers worked closely with the client’s dev leads to design test cycles that balanced speed with coverage.

1. Real-Device Coverage

To ensure realistic performance data, we used over 20 real devices covering major brands and chipsets:

- Android devices (Samsung Galaxy S25 Ultra, Google Pixel 8, OnePlus 11, Xiaomi 13)

- iOS devices (iPhone 11 to 17 Pro)

- Windows and macOS systems for cross-platform checks

This range helped us detect chipset-specific issues early.

2. Multi-Layer Testing Approach

We executed multiple testing types simultaneously:

- Functional Testing: Validated combat, rewards, inventory, and UI flows.

- Performance Testing: Monitored FPS stability, CPU/GPU usage, and heat generation.

- Network Testing: Simulated weak Wi-Fi, 4G, and throttled connections to identify desync.

- Compatibility Testing: Verified gameplay consistency across screen sizes, resolutions, and OS versions.

- Regression Testing: Ensured that fixes didn’t introduce new bugs in later builds.
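To make "FPS stability" concrete, here is a minimal Python sketch of how a capture of per-frame render times could be summarized. It is an illustration only, not the tooling used on this project: the function name `fps_summary`, the 30 FPS drop threshold, and the sample data are all assumptions.

```python
def fps_summary(frame_times_ms, drop_threshold_fps=30.0):
    """Summarize a capture of per-frame render times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS is the number of frames divided by total elapsed seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds
    # A frame counts as a drop when its instantaneous FPS is below the threshold.
    slow = [t for t in frame_times_ms if 1000.0 / t < drop_threshold_fps]
    return {
        "avg_fps": round(avg_fps, 1),
        "worst_frame_ms": max(frame_times_ms),
        "dropped_share": round(len(slow) / len(frame_times_ms), 3),
    }

# Hypothetical capture: mostly ~60 FPS frames with a short stall.
sample = [16.7] * 95 + [50.0] * 5
summary = fps_summary(sample)
print(summary)
```

Reporting the share of slow frames alongside the average matters because a short stall during a boss fight barely moves the average but is very noticeable to players.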

3. Tools & Reporting

We combined crash reporting with profiling tools:

- Firebase Crashlytics for live crash tracking

- GameBench and Unity Profiler for frame and memory analysis

- Android Profiler for thermal and resource monitoring

All issues were documented in Jira, complete with steps to reproduce, expected vs actual behavior, screenshots, and Loom videos for faster developer triage.
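A gradual memory leak typically shows up as a steady upward trend in memory samples taken over a long session. The Python sketch below fits a least-squares line to such samples and flags a suspicious slope. The sample values, the function name `leak_slope_mb_per_min`, and the 1 MB/min threshold are hypothetical; in practice the readings would come from a profiler export.

```python
def leak_slope_mb_per_min(samples):
    """Least-squares slope of memory (MB) against elapsed time (minutes)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    num = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    if den == 0:
        raise ValueError("samples must span more than one timestamp")
    return num / den

# Hypothetical (minutes, MB) readings from one long play session.
session = [(0, 900), (10, 960), (20, 1010), (30, 1075), (40, 1130)]
slope = leak_slope_mb_per_min(session)

LEAK_THRESHOLD_MB_PER_MIN = 1.0  # assumed cut-off, tune per title
suspected_leak = slope > LEAK_THRESHOLD_MB_PER_MIN
print(f"{slope:.2f} MB/min, suspected leak: {suspected_leak}")
```

A trend line is only a first signal: legitimate caching can also grow memory, so a flagged session still needs a profiler snapshot to confirm what is being retained.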

4. Prioritization & Daily Syncs

We categorized every issue by severity: Critical, Major, or Minor. Daily sync meetings with the developers ensured quick turnaround times, while nightly regression passes confirmed the stability of each new build.
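As a small illustration of severity-based triage, the Python sketch below orders a backlog so that Critical issues surface first. The `Issue` class, the issue keys, and the summaries are made up for the example and do not reflect the client's actual tracker data.

```python
from dataclasses import dataclass

# Lower number means higher priority.
SEVERITY_ORDER = {"Critical": 0, "Major": 1, "Minor": 2}

@dataclass
class Issue:
    key: str       # hypothetical tracker key
    summary: str
    severity: str  # one of the keys in SEVERITY_ORDER

def triage(issues):
    """Return issues sorted by severity; equal severities keep their input order."""
    return sorted(issues, key=lambda issue: SEVERITY_ORDER[issue.severity])

backlog = [
    Issue("QA-3", "Tooltip text clipped on small screens", "Minor"),
    Issue("QA-1", "Crash when entering boss arena", "Critical"),
    Issue("QA-2", "FPS drop in high-particle scene", "Major"),
]
ordered = [issue.key for issue in triage(backlog)]
print(ordered)
```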

Results Achieved

Within three structured QA cycles, the improvements were dramatic:

| Metric | Before QA | After QA | Improvement |
| --- | --- | --- | --- |
| Crash rate | 14% | 2% | -85% |
| Average FPS (mid-range Android) | 38 FPS | 60 FPS | +58% |
| Memory usage | 1.8 GB | 1.1 GB | -40% |
| Average session length | 21 min | 37 min | +76% |
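The Improvement column is a relative change, (after - before) / before. The short Python sketch below recomputes it from the Before and After values in the table. Since those values are themselves rounded, the recomputed figures may not match the published column exactly. The helper name `percent_change` is ours.

```python
def percent_change(before, after):
    """Relative change from before to after, expressed in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100.0

# (before, after) pairs taken from the results table.
metrics = {
    "Crash rate (%)": (14, 2),
    "Average FPS (mid-range Android)": (38, 60),
    "Memory usage (GB)": (1.8, 1.1),
    "Average session length (min)": (21, 37),
}

changes = {
    name: round(percent_change(before, after), 1)
    for name, (before, after) in metrics.items()
}
for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
```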

Additional highlights:

- Smooth performance across Android 12–15 and iOS 16–18

- Multiplayer latency tolerance improved by 30%

- Beta version launched with a 4.8★ average rating on both stores

- Player retention during the first month rose by 30%

The studio was so impressed that they extended our collaboration for post-launch regression testing and LiveOps QA.

Key Takeaways for Game Studios

1. Early QA Engagement Saves Time

Identifying performance bottlenecks early prevents panic fixes later. Engaging a QA team even during alpha builds can reduce bug-fix time by up to 60%.

2. Real-Device Testing Is Non-Negotiable

Emulators can’t replicate thermal throttling, network variability, or chipset differences. Real-device testing ensures real-world stability.

3. Prioritize Regression Cycles

Every new build can break older fixes. Continuous regression cycles maintain consistency and prevent critical gameplay regressions from reappearing.

4. Communication Is Key

Clear defect reporting with visuals and severity tagging helps developers act faster, minimizing misunderstandings and development delays.

Conclusion

For this UK studio, stabilizing the game was the difference between an average launch and a highly rated success. By focusing on structured QA, real-device validation, and efficient reporting, Testers HUB helped transform a crash-prone build into a reliable, globally praised release.

Every game—whether mobile, PC, or cross-platform—benefits from proactive QA involvement. When performance issues are caught before launch, studios save time, money, and reputation.

🎮 Looking to make your next release flawless?

Next Step: Explore Our Game Testing Packages

If you want to know exactly how much coverage, device diversity, and regression support you can get within your budget, explore our flexible plans tailored for indie studios and AAA teams alike.

FAQs

Q1. How many QA cycles are ideal before launch?

We usually recommend three complete cycles—functional, performance, and regression—to catch most stability and gameplay issues.

Q2. Do you also test post-release updates?

Yes. We provide continuous LiveOps QA and hotfix validation to maintain stability after launch.

Q3. Which devices do you use for mobile game testing?

Our device lab includes 20+ real devices covering Android 10–16 and iOS 15–18 across multiple brands and chipsets.

Q4. Can small or indie studios afford your services?

Absolutely. Our pricing tiers are flexible, ensuring quality assurance fits every production scale.

Q5. How fast can you start?

We can onboard within 24–48 hours once we have access to the build and an agreed scope.